Regression Testing

A superset of smoke and sanity testing, regression testing is usually performed when testers have ample time. It is often automated: after new functionality is added, every affected area is targeted in detail, with an emphasis on the features that already existed. Regression testing is executed after significant changes have been made to the software build, to verify bug fixes and requirement changes and to confirm that they have not broken anything else. It is conducted after sanity testing of the changed functionality, and it puts all the impacted features of an application through thorough testing, which supports the overall quality assurance of the product. It is performed by the Quality Assurance team.

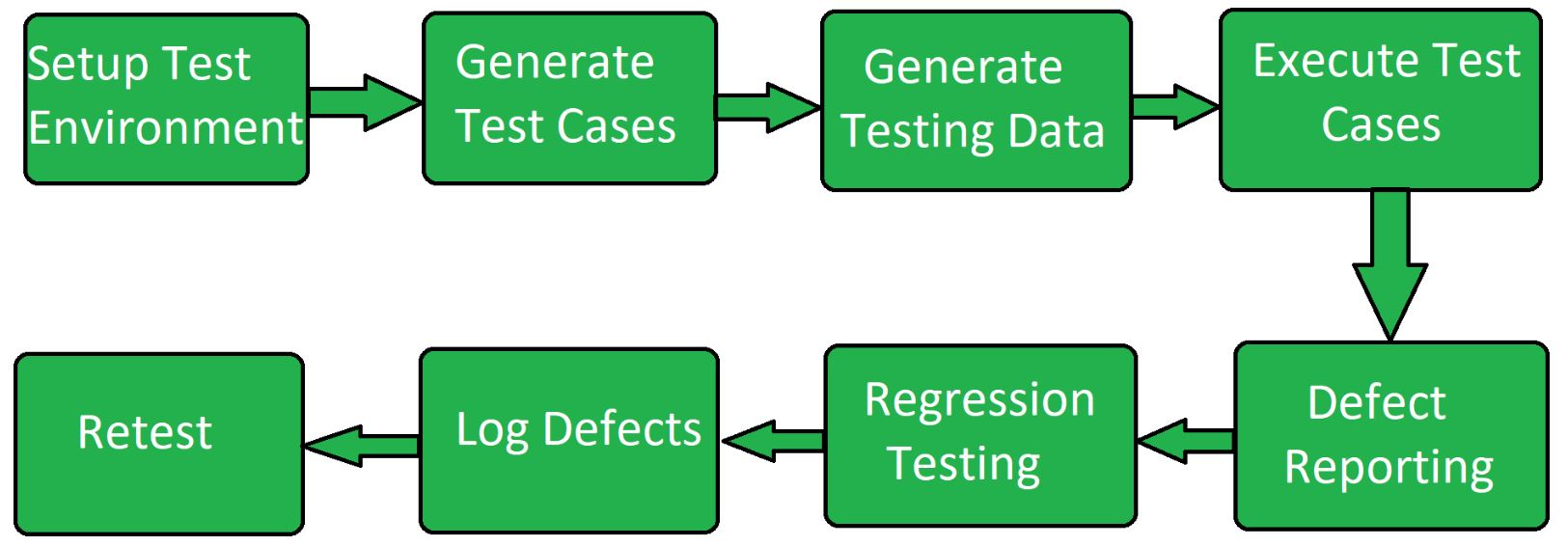

Regression testing is needed whenever the code changes and you must confirm that the application still functions properly. It is also needed whenever a new feature is incorporated into the software or application, to check for new defects and performance problems. Optimizations, removal of existing features, bug fixes, and enhancements are the typical triggers for regression testing.

The process of regression testing is easy to understand with a simple example. Suppose you have been given a messaging application to test. It lets users send and receive texts and make phone and video calls. The developers have added a new feature for making payments online and have also changed the phone call option so that you can talk to multiple people at once. Testing the impact of these changes on the other functionalities and on the overall working of the application is called regression testing.
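To make the example concrete, here is a minimal Python sketch. `MessagingApp`, its methods, and every test name are hypothetical stand-ins for the app under test, not a real library. The tests written for the existing features (texting, one-to-one calls, video calls) are rerun unchanged alongside new tests for group calls and payments; a failure in any of the old tests would signal a regression.

```python
# Hypothetical regression-suite sketch for the messaging-app example.
# MessagingApp and all test names are illustrative, not a real library.


class MessagingApp:
    """Toy model of the app *after* the developers' changes."""

    def __init__(self):
        self.inboxes = {}

    def send_message(self, sender, recipient, text):
        self.inboxes.setdefault(recipient, []).append((sender, text))
        return True

    def receive_messages(self, user):
        return self.inboxes.get(user, [])

    def start_call(self, caller, participants, video=False):
        # Changed feature: a phone call may now include several participants.
        return {"caller": caller, "participants": list(participants), "video": video}

    def make_payment(self, sender, recipient, amount):
        # New feature: online payments.
        if amount <= 0:
            raise ValueError("amount must be positive")
        return {"from": sender, "to": recipient, "amount": amount}


# Tests for features that existed before the change: rerun them unchanged.
def test_send_and_receive_text():
    app = MessagingApp()
    app.send_message("alice", "bob", "hi")
    assert app.receive_messages("bob") == [("alice", "hi")]


def test_one_to_one_phone_call():
    call = MessagingApp().start_call("alice", ["bob"])
    assert call["participants"] == ["bob"] and not call["video"]


def test_video_call():
    assert MessagingApp().start_call("alice", ["bob"], video=True)["video"]


# Tests for the changed and newly added features.
def test_group_call():
    call = MessagingApp().start_call("alice", ["bob", "carol"])
    assert len(call["participants"]) == 2


def test_payment():
    assert MessagingApp().make_payment("alice", "bob", 10)["amount"] == 10


REGRESSION_SUITE = [
    test_send_and_receive_text,
    test_one_to_one_phone_call,
    test_video_call,
    test_group_call,
    test_payment,
]


def run_suite(tests):
    """Run each test function and return the names of those that failed."""
    failed = []
    for test in tests:
        try:
            test()
        except Exception:  # an assertion failure or an unexpected error
            failed.append(test.__name__)
    return failed


if __name__ == "__main__":
    print("regression failures:", run_suite(REGRESSION_SUITE) or "none")
```

If the change to calling had accidentally broken one-to-one calls, `test_one_to_one_phone_call` would show up in the returned list even though the new group-call test passes; catching that kind of side effect is the point of regression testing. A real project would more likely hand these `test_` functions to a runner such as pytest or unittest than use the hand-rolled `run_suite`.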

Smoke Testing

The main aim of smoke testing is to reject a defective software build early, so that the Quality Assurance team does not waste time installing and testing software that is fundamentally broken. Generally performed during the initial stages of the Software Development Life Cycle (SDLC), smoke testing makes sure that the core features of an application work without problems. Its purpose is not deep testing but confirming that the core functionality works. It is done before any other detailed tests.

Also known as the build verification test, smoke testing focuses on issues in critical areas rather than on the complete application. It is done not only by testers but also by developers. A subset of the rigorous testing process, smoke testing uses test cases that cover the important components of the build. There are no time-consuming exhaustive tests, only verification that the crucial elements work properly. It is performed whenever the developers provide the Quality Assurance team with a fresh build, that is, software with new changes incorporated in it. Smoke testing can also be performed when a new module is added to existing functionality.

It should be done for every new release and every new build, which means running it daily if new builds arrive daily. Once your build is stable, you can also automate the smoke test. A smoke test is critical because it stops broken or unstable builds from moving forward and helps to find integration issues much faster. It makes detecting and fixing problems easier and gives the tester confidence to proceed with the later stages of testing. Along with the features of the build, overall performance, security, and privacy policy can also be checked.

Let’s continue with the same example, where you have been given the messaging application to smoke test. Its key functions are composing a message, sending it, and receiving messages. If messages cannot be sent, other functions such as uploading a status, viewing a status, or changing the profile picture make no sense. You therefore reject the software build without further testing, since the core functionality does not work. This is smoke testing.
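The smoke test described above can be sketched as a small gate in Python. The `FakeBuild` class and the three check names are hypothetical stand-ins for the real application; the point is that only the core paths (compose, send, receive) are exercised, and any failure rejects the whole build.

```python
from typing import Callable, Dict, List, Tuple


class FakeBuild:
    """Hypothetical stand-in for a freshly delivered build of the messaging app."""

    def __init__(self, sending_works: bool):
        self.sending_works = sending_works
        self.delivered: List[str] = []

    def compose(self, text: str) -> str:
        return text.strip()

    def send(self, text: str) -> bool:
        if self.sending_works:
            self.delivered.append(text)
        return self.sending_works

    def receive(self) -> List[str]:
        return list(self.delivered)


def core_checks(build: FakeBuild) -> Dict[str, Callable[[], bool]]:
    """Only the critical paths: compose, send, receive (run in this order)."""
    return {
        "compose": lambda: build.compose("  hello ") == "hello",
        "send": lambda: build.send("hello"),
        "receive": lambda: "hello" in build.receive(),
    }


def smoke_test(build: FakeBuild) -> Tuple[bool, List[str]]:
    """Return (accepted, failed_checks); any failure rejects the whole build."""
    failed = [name for name, check in core_checks(build).items() if not check()]
    return not failed, failed


if __name__ == "__main__":
    print(smoke_test(FakeBuild(sending_works=True)))   # (True, [])
    print(smoke_test(FakeBuild(sending_works=False)))  # (False, ['send', 'receive'])
```

With sending broken, the build is dropped before any status, profile, or payment feature is looked at. This sketch runs every core check so that it can report all failures; stopping at the first failure would be an equally reasonable design.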

Sanity Testing

Also known as surface-level testing, sanity testing decides whether a software build received after several rounds of regression is stable enough to pass to the next level of testing. It needs to be done when a new functionality or module is added to any software or application: it is a quick evaluation of the quality of the software about to be released, which determines whether it is ready for the next stage of testing. Sanity testing is also done when minor changes are made to the code or functionality of the build, since it decides whether end-to-end testing of the build should be carried out.

Sanity testing should be carried out to verify and validate that newly added modules, functions, and features comply with their requirements. It also ensures that the introduced changes do not affect the other functionalities of the product. Sanity testing should be done when the software is received after bug fixes or just before deployment.

In QA, sanity testing is a subset of regression testing. If a build fails sanity testing, it is rejected outright to save time and money. Sanity testing is performed only after the build has passed the smoke test and the Quality Assurance team has accepted it for further stages of testing. The team's focus is to validate the functionality of the build without testing it in detail. Rather than checking the entire functionality of the application, the test determines whether the developer applied sound reasoning and logic (sanity) while developing the software.

Most other forms of testing follow strict rules for how the testing is carried out, but sanity testing is not bound by a fixed set of rules. A sanity test is quick, and to keep it that way QA engineers usually don't script the test cases. The core objective is to make sure that the affected components do not produce incorrect results or contain obvious bugs.

Three phases have to be carried out to perform sanity testing:

Identification: This step involves identifying the new modules, features, and functionalities. The tester also has to keep an eye on any changes or modifications that may have been introduced in the code while bugs were being fixed.

Evaluation: This step checks whether the newly implemented features work as intended.

Testing: The last step is a quick random check across the parameters, elements, and functionalities.

If these three steps pass without faults, the build can be passed on for comprehensive testing. Continuing with the messaging application example, suppose you now have to perform a sanity test on it. If you find that, instead of another person's status, you see their profile picture, there is no point in checking more advanced functionality such as making online payments, customizing stickers, or holding a conference video call, because the basic features fail to work.
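The three phases above can be sketched in Python as a quick check driven by a changelog. Everything here is hypothetical: `CHANGED_FEATURES` stands in for the list of features touched in the new build, `FakeBuild` models the status bug from the example, and the random spot check uses a fixed seed so that runs are repeatable.

```python
import random

# Hypothetical changelog: the features touched in the new build.
CHANGED_FEATURES = {"view_status"}


class FakeBuild:
    """Toy stand-in for the messaging app build under test."""

    def __init__(self, status_bug):
        self.status_bug = status_bug
        self.profiles = {"bob": {"status": "At the gym", "picture": "bob.png"}}

    def view_status(self, user):
        profile = self.profiles[user]
        # The bug from the example: the profile picture is shown instead of the status.
        return profile["picture"] if self.status_bug else profile["status"]

    def view_profile_picture(self, user):
        return self.profiles[user]["picture"]

    def send_message(self, recipient, text):
        return recipient in self.profiles and bool(text)


# Quick checks keyed by feature name, kept deliberately lightweight.
CHECKS = {
    "view_status": lambda build: build.view_status("bob") == "At the gym",
    "view_profile_picture": lambda build: build.view_profile_picture("bob") == "bob.png",
    "send_message": lambda build: build.send_message("bob", "hi"),
}


def sanity_test(build, changed, spot_checks=1, seed=0):
    """Return (passed, failed_features) following the three phases."""
    # 1. Identification: pick out the features affected by the change.
    targets = [name for name in CHECKS if name in changed]

    # 2. Evaluation: confirm each changed feature works as intended.
    failed = [name for name in targets if not CHECKS[name](build)]
    if failed:
        return False, failed

    # 3. Testing: a random spot check of a few unchanged features.
    others = [name for name in CHECKS if name not in changed]
    rng = random.Random(seed)
    sampled = rng.sample(others, min(spot_checks, len(others)))
    failed = [name for name in sampled if not CHECKS[name](build)]
    return not failed, failed
```

With the status bug present, `sanity_test(FakeBuild(status_bug=True), CHANGED_FEATURES)` returns `(False, ['view_status'])`, and the build is rejected without touching payments or video calls. The checks are plain lambdas rather than a full scripted suite, in keeping with the informal nature of sanity testing.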

Pointers to Note

While smoke testing verifies stability and regression testing verifies the impact of changes, sanity testing verifies the rationality of a software build.

Regression testing helps to enhance the quality of the product being deployed, whereas smoke and sanity testing aid the Quality Assurance team by saving time and effort.

Smoke testing is performed at the initial stage, whereas sanity and regression testing are performed toward the end to check the functionality.

The Quality Assurance team has to perform all three tests, one after the other. Each of these tests has a defined set of test cases that are executed many times. Smoke testing is done first, followed by sanity testing, and then, if time permits, regression testing.
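The order described above can be expressed as a short gating function. This is a sketch under simple assumptions: each stage is a hypothetical zero-argument callable that returns `True` on success, and `time_permits` models whether there is time for the full regression run.

```python
from typing import Callable, List, Tuple

Stage = Callable[[], bool]  # hypothetical: returns True if the stage passes


def run_test_stages(smoke: Stage, sanity: Stage, regression: Stage,
                    time_permits: bool) -> Tuple[str, List[str]]:
    """Run smoke, then sanity, then (optionally) regression.

    Returns the verdict and the list of stages that were actually run.
    A failing stage rejects the build and skips every later stage.
    """
    stages_run: List[str] = []
    ordered = [("smoke", smoke), ("sanity", sanity)]
    if time_permits:
        ordered.append(("regression", regression))
    for name, stage in ordered:
        stages_run.append(name)
        if not stage():
            return f"rejected at {name}", stages_run
    return "accepted", stages_run


if __name__ == "__main__":
    # A build whose smoke test fails never reaches sanity or regression testing.
    print(run_test_stages(lambda: False, lambda: True, lambda: True, True))
    # -> ('rejected at smoke', ['smoke'])
```

Returning the list of stages that ran makes the cost saving visible: a build rejected at the smoke stage consumes none of the sanity or regression effort.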